mirror of

https://github.com/voson-wang/toon.git

synced 2026-01-29 23:34:10 +08:00

test: refactor accuracy benchmark generation


21

README.md


@@ -31,6 +31,13 @@ users[2]{id,name,role}:

|

|||||||

2,Bob,user

|

2,Bob,user

|

||||||

```

|

```

|

||||||

|

|

||||||

|

<details>

|

||||||

|

<summary>Another reason</summary>

|

||||||

|

|

||||||

|

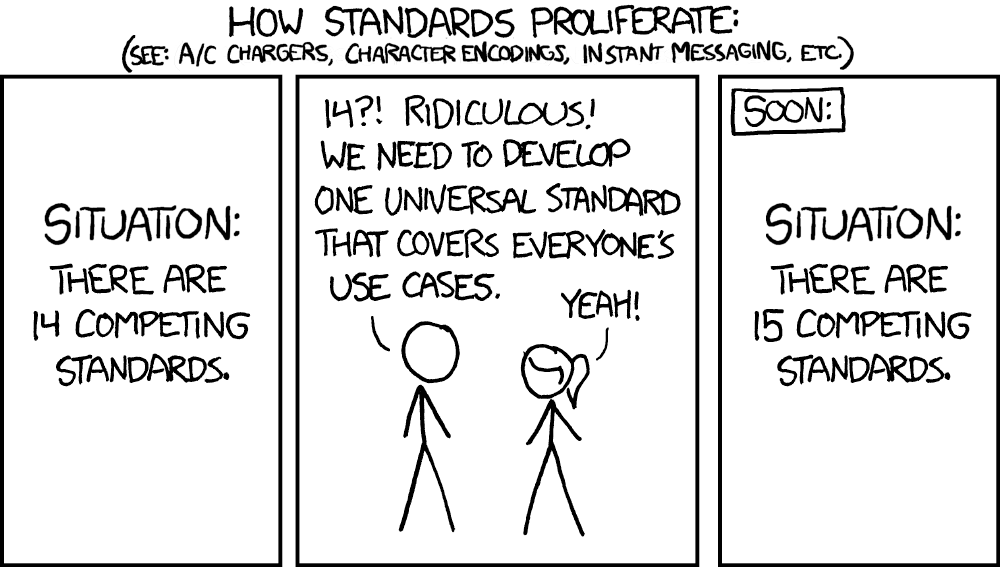

[](https://xkcd.com/927/)

|

||||||

|

|

||||||

|

</details>

|

||||||

|

|

||||||

> [!NOTE]

|

> [!NOTE]

|

||||||

> I built TOON to save tokens when sending large datasets to LLMs at work, where I tend to have uniform arrays of objects that benefit from the tabular format.

|

> I built TOON to save tokens when sending large datasets to LLMs at work, where I tend to have uniform arrays of objects that benefit from the tabular format.

|

||||||

|

|

||||||

@@ -225,7 +232,7 @@ claude-haiku-4-5
 ##### Uniform employee records (TOON optimal format)
 
 | Format | Accuracy | Tokens | Correct/Total |
-|--------|----------|--------|---------------|
+| ------ | -------- | ------ | ------------- |
 | `toon` | 86.2% | 2.483 | 100/116 |
 | `csv` | 80.2% | 2.337 | 93/116 |
 | `yaml` | 82.8% | 4.969 | 96/116 |

@@ -235,7 +242,7 @@ claude-haiku-4-5
 ##### E-commerce orders with nested structures
 
 | Format | Accuracy | Tokens | Correct/Total |
-|--------|----------|--------|---------------|
+| ------ | -------- | ------ | ------------- |
 | `toon` | 90.9% | 5.967 | 80/88 |
 | `csv` | 90.9% | 6.735 | 80/88 |
 | `yaml` | 89.8% | 7.328 | 79/88 |

@@ -245,17 +252,17 @@ claude-haiku-4-5
 ##### Time-series analytics data
 
 | Format | Accuracy | Tokens | Correct/Total |
-|--------|----------|--------|---------------|
+| ------ | -------- | ------ | ------------- |
 | `csv` | 87.9% | 1.393 | 51/58 |
 | `toon` | 86.2% | 1.515 | 50/58 |
 | `yaml` | 86.2% | 2.938 | 50/58 |
 | `json` | 87.9% | 3.665 | 51/58 |
 | `markdown-kv` | 86.2% | 3.779 | 50/58 |
 
-##### Popular GitHub repositories
+##### Top 100 GitHub repositories
 
 | Format | Accuracy | Tokens | Correct/Total |
-|--------|----------|--------|---------------|
+| ------ | -------- | ------ | ------------- |
 | `csv` | 80.4% | 8.513 | 45/56 |
 | `toon` | 80.4% | 8.745 | 45/56 |
 | `yaml` | 78.6% | 13.129 | 44/56 |

@@ -267,7 +274,7 @@ claude-haiku-4-5
 ##### gpt-5-nano
 
 | Format | Accuracy | Correct/Total |
-|--------|----------|---------------|
+| ------ | -------- | ------------- |
 | `toon` | 97.5% | 155/159 |
 | `markdown-kv` | 95.6% | 152/159 |
 | `yaml` | 94.3% | 150/159 |

@@ -277,7 +284,7 @@ claude-haiku-4-5
 ##### claude-haiku-4-5
 
 | Format | Accuracy | Correct/Total |
-|--------|----------|---------------|
+| ------ | -------- | ------------- |
 | `markdown-kv` | 76.7% | 122/159 |
 | `toon` | 75.5% | 120/159 |
 | `json` | 75.5% | 120/159 |

|

|||||||

File diff suppressed because it is too large


@@ -28,7 +28,7 @@ claude-haiku-4-5
 ##### Uniform employee records (TOON optimal format)
 
 | Format | Accuracy | Tokens | Correct/Total |
-|--------|----------|--------|---------------|
+| ------ | -------- | ------ | ------------- |
 | `toon` | 86.2% | 2.483 | 100/116 |
 | `csv` | 80.2% | 2.337 | 93/116 |
 | `yaml` | 82.8% | 4.969 | 96/116 |

@@ -38,7 +38,7 @@ claude-haiku-4-5
 ##### E-commerce orders with nested structures
 
 | Format | Accuracy | Tokens | Correct/Total |
-|--------|----------|--------|---------------|
+| ------ | -------- | ------ | ------------- |
 | `toon` | 90.9% | 5.967 | 80/88 |
 | `csv` | 90.9% | 6.735 | 80/88 |
 | `yaml` | 89.8% | 7.328 | 79/88 |

@@ -48,17 +48,17 @@ claude-haiku-4-5
 ##### Time-series analytics data
 
 | Format | Accuracy | Tokens | Correct/Total |
-|--------|----------|--------|---------------|
+| ------ | -------- | ------ | ------------- |
 | `csv` | 87.9% | 1.393 | 51/58 |
 | `toon` | 86.2% | 1.515 | 50/58 |
 | `yaml` | 86.2% | 2.938 | 50/58 |
 | `json` | 87.9% | 3.665 | 51/58 |
 | `markdown-kv` | 86.2% | 3.779 | 50/58 |
 
-##### Popular GitHub repositories
+##### Top 100 GitHub repositories
 
 | Format | Accuracy | Tokens | Correct/Total |
-|--------|----------|--------|---------------|
+| ------ | -------- | ------ | ------------- |
 | `csv` | 80.4% | 8.513 | 45/56 |
 | `toon` | 80.4% | 8.745 | 45/56 |
 | `yaml` | 78.6% | 13.129 | 44/56 |

@@ -70,7 +70,7 @@ claude-haiku-4-5
 ##### gpt-5-nano
 
 | Format | Accuracy | Correct/Total |
-|--------|----------|---------------|
+| ------ | -------- | ------------- |
 | `toon` | 97.5% | 155/159 |
 | `markdown-kv` | 95.6% | 152/159 |
 | `yaml` | 94.3% | 150/159 |

@@ -80,7 +80,7 @@ claude-haiku-4-5
 ##### claude-haiku-4-5
 
 | Format | Accuracy | Correct/Total |
-|--------|----------|---------------|
+| ------ | -------- | ------------- |
 | `markdown-kv` | 76.7% | 122/159 |
 | `toon` | 75.5% | 120/159 |
 | `json` | 75.5% | 120/159 |

|

|||||||

@@ -61,7 +61,7 @@
 },
 {
 "name": "github",
-"description": "Popular GitHub repositories"
+"description": "Top 100 GitHub repositories"
 }
 ],
 "tokenCounts": {

@@ -86,5 +86,5 @@
 "yaml-analytics": 2938,
 "yaml-github": 13129
 },
-"timestamp": "2025-10-27T12:43:38.288Z"
+"timestamp": "2025-10-27T13:04:50.634Z"
 }

|

|||||||
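The Tokens columns in the report hunks above read `2.483`, `13.129`, and so on, while the results JSON stores the same counts as plain integers (`2938`, `13129`). That pattern suggests, although the diff does not confirm it, that the dots are thousands separators produced by a locale-dependent `toLocaleString()` call on a machine with a non-English default locale. A minimal sketch of pinning the locale follows; `formatTokens` is a hypothetical helper and is not part of the commit.

```typescript
// Hypothetical helper (not in the commit): format a token count with an
// explicit locale so the output does not depend on the machine's settings.
function formatTokens(count: number, locale: string = 'en-US'): string {
  return count.toLocaleString(locale)
}

// Same number, two locales. With full ICU data the first call uses a comma
// as the thousands separator and the second uses a dot.
const english = formatTokens(13129)
const german = formatTokens(13129, 'de-DE')
console.log(english, german)
```

Passing the locale explicitly keeps regenerated reports stable across contributors' machines.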

@@ -81,6 +81,7 @@ else {
 
 // Format datasets once (reuse for all questions)
 const formattedDatasets: Record<string, Record<string, string>> = {}
+
 for (const [formatName, formatter] of Object.entries(formatters)) {
 formattedDatasets[formatName] ??= {}
 

@@ -91,6 +92,7 @@ else {
 
 // Generate evaluation tasks
 const tasks: { question: Question, formatName: string, modelName: string }[] = []
+
 for (const question of questions) {
 for (const [formatName] of Object.entries(formatters)) {
 for (const [modelName] of Object.entries(activeModels)) {

@@ -100,7 +102,6 @@ else {
 }
 
 const total = tasks.length
-
 consola.start(`Running ${total} evaluations with concurrency: ${DEFAULT_CONCURRENCY}`)
 
 // Evaluate all tasks in parallel

@@ -110,16 +111,15 @@ else {
 const formattedData = formattedDatasets[task.formatName]![task.question.dataset]!
 const model = activeModels[task.modelName as keyof typeof activeModels]!
 
-const result = await evaluateQuestion(
-task.question,
-task.formatName,
+const result = await evaluateQuestion({
+question: task.question,
+formatName: task.formatName,
 formattedData,
 model,
-task.modelName,
-)
+})
 
-// Progress update
-if ((index + 1) % 10 === 0) {
+// Progress update after task completes
+if ((index + 1) % 10 === 0 || (index + 1) === total) {
 const percent = (((index + 1) / total) * 100).toFixed(1)
 consola.start(`Progress: ${index + 1}/${total} (${percent}%)`)
 }

@@ -133,6 +133,7 @@ else {
 }
 
 // Generate/regenerate markdown report
+consola.start('Generating report and saving results…')
 const formatResults = calculateFormatResults(results, tokenCounts)
 await saveResults(results, formatResults, questions, tokenCounts)
 

|

|||||||
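The revised progress check in the hunk above also fires on the final task, so a run whose task count is not a multiple of 10 still ends with a 100% line. A small sketch of that predicate in isolation follows; `shouldLogProgress` is a hypothetical name, and the interval of 10 is taken from the diff.

```typescript
// Hypothetical helper: true when a progress line should be printed for the
// task at zero-based `index` out of `total` tasks.
function shouldLogProgress(index: number, total: number, every: number = 10): boolean {
  const completed = index + 1
  return completed % every === 0 || completed === total
}

// For 23 tasks, collect the task counts at which a progress line would appear.
const logged: number[] = []
for (let index = 0; index < 23; index++) {
  if (shouldLogProgress(index, 23))
    logged.push(index + 1)
}
// logged now holds 10, 20, and 23
console.log(logged.join(', '))
```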

@@ -46,7 +46,7 @@ const BENCHMARK_EXAMPLES = [
 {
 name: 'E-commerce Order',
 emoji: '🛒',
-description: 'Nested order with customer and items',
+description: 'Single nested order with customer and items',
 getData: generateOrder,
 showDetailed: false,
 },

|

|||||||

@@ -5,8 +5,9 @@ export const ROOT_DIR: string = url.fileURLToPath(new URL('../../', import.meta.
 export const BENCHMARKS_DIR: string = url.fileURLToPath(new URL('../', import.meta.url))
 
 /**
-* Benchmark execution configuration
+* Default concurrency for parallel evaluations
 */
+export const DEFAULT_CONCURRENCY = 20
 
 /**
 * Enable dry run mode for quick testing with limited AI requests

@@ -27,13 +28,3 @@ export const DRY_RUN_LIMITS = {
 /** Models to use in dry run */
 allowedModels: [] as string[],
 }
-
-/**
-* Default concurrency for parallel evaluations
-*/
-export const DEFAULT_CONCURRENCY = 20
-
-/**
-* Delay between API requests to avoid rate limiting (in milliseconds)
-*/
-export const RATE_LIMIT_DELAY_MS = 100

|

|||||||
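With `RATE_LIMIT_DELAY_MS` removed, throttling rests on `DEFAULT_CONCURRENCY` alone. The diff does not show how the runner applies that limit, so the following is only a sketch of one dependency-free way to cap the number of in-flight promises; `mapWithConcurrency` is a hypothetical helper, not the project's code.

```typescript
// Hypothetical helper: run `worker` over `items` with at most `limit`
// calls in flight, returning results in input order.
async function mapWithConcurrency<T, R>(
  items: readonly T[],
  limit: number,
  worker: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  const results = new Array<R>(items.length)
  let next = 0

  // Each runner claims the next unprocessed index until none remain.
  async function run(): Promise<void> {
    while (next < items.length) {
      const index = next++
      results[index] = await worker(items[index]!, index)
    }
  }

  const runnerCount = Math.max(1, Math.min(limit, items.length))
  await Promise.all(Array.from({ length: runnerCount }, () => run()))
  return results
}
```

A call such as `mapWithConcurrency(tasks, 20, evaluateTask)` (with `evaluateTask` standing in for whatever the runner does per task) would keep at most 20 requests open at once without any fixed delay between them.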

@@ -122,16 +122,16 @@ const analyticsDataset: Dataset = {
 }
 
 /**
-* GitHub dataset: Popular repositories
+* Real-world dataset: Top 100 starred GitHub repositories
 *
 * @remarks
-* Tests TOON's tabular format with real-world data
+* Tests TOON's tabular format
 */
 const githubDataset: Dataset = {
 name: 'github',
-description: 'Popular GitHub repositories',
+description: 'Top 100 GitHub repositories',
 data: {
-repositories: githubRepos.slice(0, 200),
+repositories: githubRepos,
 },
 }
 

|

|||||||

@@ -9,12 +9,10 @@
 
 import type { LanguageModelV2 } from '@ai-sdk/provider'
 import type { EvaluationResult, Question } from './types'
-import { setTimeout } from 'node:timers/promises'
 import { anthropic } from '@ai-sdk/anthropic'
 import { openai } from '@ai-sdk/openai'
 import { generateText } from 'ai'
 import { consola } from 'consola'
-import { RATE_LIMIT_DELAY_MS } from './constants'
 
 /**
 * Models used for evaluation

@@ -28,11 +26,8 @@ export const models: Record<string, LanguageModelV2> = {
 * Evaluate a single question with a specific format and model
 */
 export async function evaluateQuestion(
-question: Question,
-formatName: string,
-formattedData: string,
-model: LanguageModelV2,
-modelName: string,
+{ question, formatName, formattedData, model}:
+{ question: Question, formatName: string, formattedData: string, model: LanguageModelV2 },
 ): Promise<EvaluationResult> {
 const prompt = `Given the following data in ${formatName} format:
 

@@ -51,10 +46,8 @@ Provide only the direct answer, without any additional explanation or formatting
 temperature: model.modelId.startsWith('gpt-') ? undefined : 0,
 })
 
-await setTimeout(RATE_LIMIT_DELAY_MS)
-
 const latencyMs = performance.now() - startTime
-const correct = await validateAnswer({
+const isCorrect = await validateAnswer({
 actual: text.trim(),
 expected: question.groundTruth,
 question: question.prompt,

@@ -63,10 +56,10 @@ Provide only the direct answer, without any additional explanation or formatting
 return {
 questionId: question.id,
 format: formatName,
-model: modelName,
+model: model.modelId,
 expected: question.groundTruth,
 actual: text.trim(),
-correct,
+isCorrect,
 inputTokens: usage.inputTokens,
 outputTokens: usage.outputTokens,
 latencyMs,

@@ -105,8 +98,6 @@ Respond with only "YES" or "NO".`
 temperature: 0,
 })
 
-await setTimeout(RATE_LIMIT_DELAY_MS)
-
 return text.trim().toUpperCase() === 'YES'
 }
 catch (error) {

|

|||||||
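The signature change above replaces five positional arguments with a single destructured object, which makes call sites self-describing and lets the function read the model name from `model.modelId` instead of receiving it separately. A reduced sketch of that pattern follows; `FakeModel`, `EvaluateOptions`, and `describeTask` are stand-ins invented for illustration, not types from the AI SDK or the benchmark code.

```typescript
// Stand-in for a model object; only the field used here is declared.
interface FakeModel { modelId: string }

// Hypothetical options type mirroring the four fields in the new signature.
interface EvaluateOptions {
  question: { id: string, prompt: string }
  formatName: string
  formattedData: string
  model: FakeModel
}

// Named fields cannot be swapped by accident the way adjacent positional
// string arguments can.
function describeTask({ question, formatName, formattedData, model }: EvaluateOptions): string {
  return `${question.id} | ${formatName} | ${model.modelId} | ${formattedData.length} chars`
}

const summary = describeTask({
  question: { id: 'q1', prompt: 'How many users?' },
  formatName: 'toon',
  formattedData: 'users[2]{id,name,role}:',
  model: { modelId: 'gpt-5-nano' },
})
console.log(summary)
```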

@@ -3,7 +3,7 @@
 *
 * Handles:
 * - Statistical analysis
-* - Twitter-ready markdown report generation with visual elements
+* - Markdown report generation with visual elements
 * - Per-dataset breakdowns
 * - Cost analysis
 * - Result file saving

@@ -28,7 +28,7 @@ export function calculateFormatResults(
 
 return formatNames.map((formatName) => {
 const formatResults = results.filter(r => r.format === formatName)
-const correctCount = formatResults.filter(r => r.correct).length
+const correctCount = formatResults.filter(r => r.isCorrect).length
 const totalCount = formatResults.length
 const accuracy = correctCount / totalCount
 

@@ -59,24 +59,17 @@ export function generateMarkdownReport(
 questions: Question[],
 tokenCounts: Record<string, number>,
 ): string {
-const lines: string[] = [
-'### Retrieval Accuracy',
-'',
-]
-
 const toon = formatResults.find(r => r.format === 'toon')
 const json = formatResults.find(r => r.format === 'json')
 
-// Model-by-model breakdown with ASCII bars
+// Build model-by-model breakdown with ASCII bars
 const modelCount = Object.keys(models).length
-lines.push(`Tested across **${modelCount} ${modelCount === 1 ? 'LLM' : 'LLMs'}** with data retrieval tasks:`, '', '```')
 
 const modelNames = Object.keys(models)
-for (let i = 0; i < modelNames.length; i++) {
-const modelName = modelNames[i]!
+const modelBreakdown = modelNames.map((modelName, i) => {
 const modelResults = formatResults.map((fr) => {
 const modelFormatResults = results.filter(r => r.model === modelName && r.format === fr.format)
-const correctCount = modelFormatResults.filter(r => r.correct).length
+const correctCount = modelFormatResults.filter(r => r.isCorrect).length
 const totalCount = modelFormatResults.length
 const accuracy = totalCount > 0 ? correctCount / totalCount : 0
 

@@ -88,34 +81,24 @@ export function generateMarkdownReport(
 }
 }).sort((a, b) => b.accuracy - a.accuracy)
 
-// Add blank line before model name, except for first model
-if (i > 0)
-lines.push('')
-lines.push(modelName)
-for (const result of modelResults) {
+const formatLines = modelResults.map((result) => {
 const bar = createProgressBar(result.accuracy, 1, 20)
 const accuracyStr = `${(result.accuracy * 100).toFixed(1)}%`.padStart(6)
 const countStr = `(${result.correctCount}/${result.totalCount})`
-lines.push(` ${result.format.padEnd(12)} ${bar} ${accuracyStr} ${countStr}`)
-}
-}
+return ` ${result.format.padEnd(12)} ${bar} ${accuracyStr} ${countStr}`
+}).join('\n')
 
-lines.push('```', '')
+// Add blank line before model name, except for first model
+return `${i > 0 ? '\n' : ''}${modelName}\n${formatLines}`
+}).join('\n')
 
-// Summary comparison
-if (toon && json) {
-const tokenSavings = ((1 - toon.totalTokens / json.totalTokens) * 100).toFixed(1)
-lines.push(
-`**Tradeoff:** TOON achieves ${(toon.accuracy * 100).toFixed(1)}% accuracy (vs JSON's ${(json.accuracy * 100).toFixed(1)}%) while using ${tokenSavings}% fewer tokens.`,
-'',
-)
-}
+// Build summary comparison
+const summaryComparison = toon && json
+? `**Tradeoff:** TOON achieves ${(toon.accuracy * 100).toFixed(1)}% accuracy (vs JSON's ${(json.accuracy * 100).toFixed(1)}%) while using ${((1 - toon.totalTokens / json.totalTokens) * 100).toFixed(1)}% fewer tokens.`
+: ''
 
-lines.push('<details>', '<summary><strong>View detailed breakdown by dataset and model</strong></summary>', '', '#### Performance by Dataset', '')
-
-for (const dataset of datasets) {
-lines.push(`##### ${dataset.description}`, '')
-
+// Build performance by dataset
+const datasetBreakdown = datasets.map((dataset) => {
 const datasetResults = formatResults.map((fr) => {
 const datasetFormatResults = results.filter(r => r.questionId.includes(dataset.name) || questions.find(q => q.id === r.questionId)?.dataset === dataset.name)
 if (datasetFormatResults.length === 0)

@@ -125,7 +108,7 @@ export function generateMarkdownReport(
 if (formatDatasetResults.length === 0)
 return undefined
 
-const correctCount = formatDatasetResults.filter(r => r.correct).length
+const correctCount = formatDatasetResults.filter(r => r.isCorrect).length
 const totalCount = formatDatasetResults.length
 const accuracy = totalCount > 0 ? correctCount / totalCount : 0
 

@@ -143,7 +126,7 @@ export function generateMarkdownReport(
 }).filter(Boolean) as { format: string, accuracy: number, tokens: number, correctCount: number, totalCount: number }[]
 
 if (datasetResults.length === 0)
-continue
+return ''
 
 // Sort by efficiency
 datasetResults.sort((a, b) => {

@@ -152,29 +135,24 @@ export function generateMarkdownReport(
 return effB - effA
 })
 
-lines.push(
-'| Format | Accuracy | Tokens | Correct/Total |',
-'|--------|----------|--------|---------------|',
-)
-
-for (const result of datasetResults.slice(0, 6)) {
-lines.push(
+const tableRows = datasetResults.slice(0, 6).map(result =>
 `| \`${result.format}\` | ${(result.accuracy * 100).toFixed(1)}% | ${result.tokens.toLocaleString()} | ${result.correctCount}/${result.totalCount} |`,
-)
-}
+).join('\n')
 
-lines.push('')
-}
+return `
+##### ${dataset.description}
 
-// Model breakdown
-lines.push('#### Performance by Model', '')
-
-for (const modelName of Object.keys(models)) {
-lines.push(`##### ${modelName}`, '')
+| Format | Accuracy | Tokens | Correct/Total |
+| ------ | -------- | ------ | ------------- |
+${tableRows}
+`.trimStart()
+}).filter(Boolean).join('\n')
 
+// Build performance by model
+const modelPerformance = modelNames.map((modelName) => {
 const modelResults = formatResults.map((fr) => {
 const modelFormatResults = results.filter(r => r.model === modelName && r.format === fr.format)
-const correctCount = modelFormatResults.filter(r => r.correct).length
+const correctCount = modelFormatResults.filter(r => r.isCorrect).length
 const totalCount = modelFormatResults.length
 const accuracy = correctCount / totalCount
 

@@ -186,36 +164,55 @@ export function generateMarkdownReport(
 }
 }).sort((a, b) => b.accuracy - a.accuracy)
 
-lines.push('| Format | Accuracy | Correct/Total |', '|--------|----------|---------------|')
+const tableRows = modelResults.map(result =>
+`| \`${result.format}\` | ${(result.accuracy * 100).toFixed(1)}% | ${result.correctCount}/${result.totalCount} |`,
+).join('\n')
 
-for (const result of modelResults) {
-lines.push(`| \`${result.format}\` | ${(result.accuracy * 100).toFixed(1)}% | ${result.correctCount}/${result.totalCount} |`)
-}
+return `
+##### ${modelName}
 
-lines.push('')
-}
+| Format | Accuracy | Correct/Total |
+| ------ | -------- | ------------- |
+${tableRows}
+`.trimStart()
+}).join('\n')
 
-// Methodology
-lines.push(
-'#### Methodology',
-'',
-'- **Semantic validation**: LLM-as-judge validates responses semantically (not exact string matching).',
-'- **Token counting**: Using `gpt-tokenizer` with `o200k_base` encoding.',
-'- **Question types**: Field retrieval, aggregation, and filtering tasks.',
-'- **Real data**: Faker.js-generated datasets + GitHub repositories.',
-'',
-'</details>',
-'',
-)
+return `
+### Retrieval Accuracy
 
-return lines.join('\n')
+Tested across **${modelCount} ${modelCount === 1 ? 'LLM' : 'LLMs'}** with data retrieval tasks:
+
+\`\`\`
+${modelBreakdown}
+\`\`\`
+
+${summaryComparison}
+
+<details>
+<summary><strong>View detailed breakdown by dataset and model</strong></summary>
+
+#### Performance by Dataset
+
+${datasetBreakdown}
+#### Performance by Model
+
+${modelPerformance}
+#### Methodology
+
+- **Semantic validation**: LLM-as-judge validates responses semantically (not exact string matching).
+- **Token counting**: Using \`gpt-tokenizer\` with \`o200k_base\` encoding.
+- **Question types**: Field retrieval, aggregation, and filtering tasks.
+- **Real data**: Faker.js-generated datasets + GitHub repositories.
+
+</details>
+`.trimStart()
 }
 
 /**
 * Calculate token counts for all format+dataset combinations
 */
 export function calculateTokenCounts(
-formatters: Record<string, (data: any) => string>,
+formatters: Record<string, (data: unknown) => string>,
 ): Record<string, number> {
 const tokenCounts: Record<string, number> = {}
 

@@ -272,7 +269,7 @@ export async function saveResults(
 }
 
 /**
-* Generate visual progress bar using ASCII characters (█ for filled, ░ for empty)
+* Generate visual progress bar using ASCII characters (`█` for filled, `░` for empty)
 */
 function createProgressBar(tokens: number, maxTokens: number, width = 30): string {
 const filled = Math.round((tokens / maxTokens) * width)

|

|||||||
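The hunks above replace imperative `lines.push(...)` calls with arrays built by `map`, joined, and interpolated into one template literal. A compact sketch of the same idea for a single table follows; `Row` and `renderTable` are hypothetical names, and the column layout mirrors the per-model tables in the diff.

```typescript
// Hypothetical row shape, reduced to the fields the table needs.
interface Row { format: string, accuracy: number, correctCount: number, totalCount: number }

// Build one markdown section declaratively: map rows to strings, join them,
// then drop them into a template that reads like the final output.
function renderTable(title: string, rows: Row[]): string {
  const tableRows = rows
    .map(r => `| \`${r.format}\` | ${(r.accuracy * 100).toFixed(1)}% | ${r.correctCount}/${r.totalCount} |`)
    .join('\n')

  return `
##### ${title}

| Format | Accuracy | Correct/Total |
| ------ | -------- | ------------- |
${tableRows}
`.trimStart()
}

const section = renderTable('gpt-5-nano', [
  { format: 'toon', accuracy: 155 / 159, correctCount: 155, totalCount: 159 },
])
console.log(section)
```

The template keeps the shape of the generated markdown visible in the source, at the cost of having to escape backticks inside it.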

@@ -18,7 +18,7 @@ export interface EvaluationResult {
 model: string
 expected: string
 actual: string
-correct: boolean
+isCorrect: boolean
 inputTokens?: number
 outputTokens?: number
 latencyMs: number

|

|||||||
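Several places in this diff compute accuracy by filtering on the renamed `isCorrect` flag. Some guard against an empty result set (`totalCount > 0 ? ... : 0`) and some divide directly, which yields `NaN` when there are no results. A sketch of a shared helper that always applies the guard follows, assuming a trimmed-down `ResultLike` type in place of the full `EvaluationResult` interface; `computeAccuracy` is a hypothetical name.

```typescript
// Reduced stand-in for EvaluationResult: only the flag the helper reads.
interface ResultLike { isCorrect: boolean }

// Hypothetical helper: count correct results and derive accuracy,
// returning 0 rather than NaN for an empty input.
function computeAccuracy(results: readonly ResultLike[]) {
  const totalCount = results.length
  const correctCount = results.filter(r => r.isCorrect).length
  const accuracy = totalCount > 0 ? correctCount / totalCount : 0
  return { correctCount, totalCount, accuracy }
}

const sample = computeAccuracy([{ isCorrect: true }, { isCorrect: false }])
console.log(sample.accuracy)
```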
